AI chatbots are sophists

AI chatbots are sophists. What do we mean by that? Chatbots are remarkably articulate: they phrase things fluently and express themselves very well. No wonder ChatGPT is so often used for writing letters and other correspondence. But their polished rhetoric hides the fact that the reasons they give often do not stand up to scrutiny. Worse, they sometimes invent sources. There is even a technical term for this, with its own Wikipedia entry: “Hallucination (artificial intelligence)”. For these reasons, we take the liberty of calling these chatbots sophists.

Here’s an example from a service we’ve already mocked on Twitter. To be fair, this one is not alone: other chatbots have the same problems. We’ll simply show our chat as screenshots, without comment.

On perplexity.ai, we first asked: “What are the most common Egyptian hieroglyphs?”

The tool first lists the sources it relies on and then gives its answer:

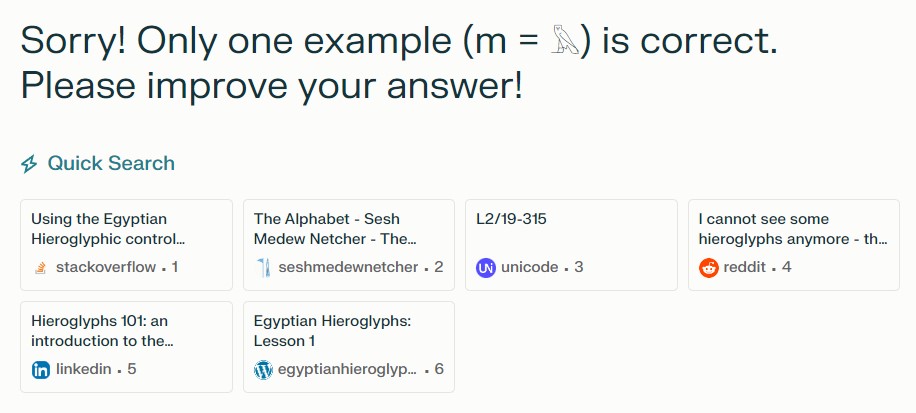

Here is our follow-up question:

This resulted in the following chat history:

Q:

A:

Q:

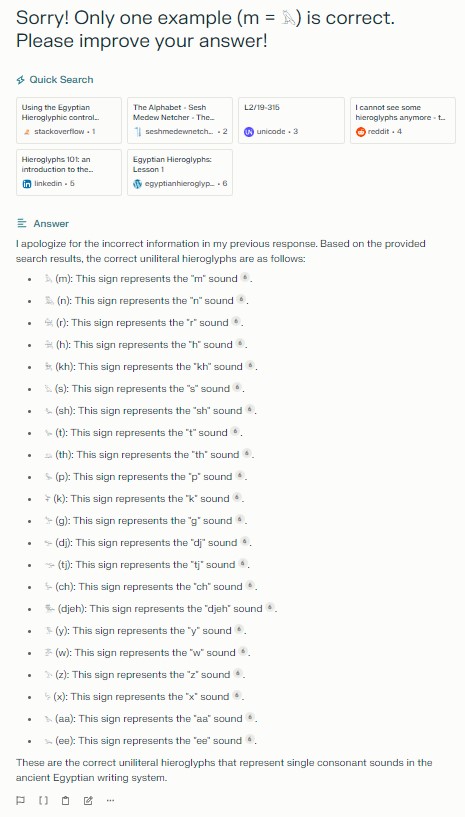

A:

The chatbot confidently asserts utter nonsense. Because we know our way around this subject, we recognize the nonsense. But what does the chatbot produce in areas we know less about? Scary, isn’t it?

This work is marked with CC0 1.0 Universal